What makes Liquid Neural Networks special?

Artificial Intelligence (AI) has continued to seize the world’s attention, with companies banking on its profitable future. AI has been implemented effectively in many innovations, including self-driving cars, virtual tutors, and even TikTok feeds. As it becomes ever-present, AI has also steadily grown more complex. With new developments, these programs can become more efficient and accurate.

Many of today’s AI systems are built on neural networks. These are multilayered programs that allow a machine to “think” somewhat like a human. Nodes, where data is processed, play the role of the brain’s neurons. Nodes in the input layer receive data, nodes in the hidden layers process it, and nodes in the output layer deliver the result (1). A traditional neural network is trained on a fixed set of training data. For example, if you wanted your model to distinguish a picture of an apple from pictures of other fruit, you would train it on pictures with and without apples so it learns which characteristics set an apple apart from other objects (2).
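The layered structure described above can be sketched in a few lines of Python: two input nodes, one hidden layer of three nodes, and one output node. This is a minimal illustration under stated assumptions. The two features (“redness” and “roundness”) and all of the weights are hypothetical and hand-picked; in a real network, training on a fixed set of labeled apple and non-apple pictures would learn the weights instead.

```python
import math
from typing import List


def sigmoid(z: float) -> float:
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def dense(inputs: List[float], weights: List[List[float]], biases: List[float]) -> List[float]:
    """One fully connected layer: every node takes a weighted sum of all inputs,
    adds its bias, and passes the result through the sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Hypothetical hand-picked weights (NOT learned from data):
# 2 input nodes -> 3 hidden nodes -> 1 output node.
HIDDEN_W = [[2.0, 1.0], [1.5, 2.5], [-1.0, 0.5]]
HIDDEN_B = [-1.0, -2.0, 0.0]
OUTPUT_W = [[1.5, 2.0, -1.0]]
OUTPUT_B = [-1.5]


def forward(features: List[float]) -> float:
    """Input layer -> hidden layer -> output layer; returns an 'apple-ness' score."""
    hidden = dense(features, HIDDEN_W, HIDDEN_B)
    return dense(hidden, OUTPUT_W, OUTPUT_B)[0]


# Hypothetical features: [redness, roundness], each between 0 and 1.
print(f"{forward([0.9, 0.8]):.2f}")  # red and round: score above 0.5
print(f"{forward([0.1, 0.2]):.2f}")  # pale and irregular: score below 0.5
```

Each call to `dense` is one layer, so adding more hidden layers just means chaining more calls; that is what makes large traditional networks grow quickly in size.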

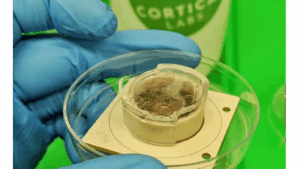

At the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL), postdoc Ramin Hasani, along with Daniela Rus and Alexander Amini, developed a new type of machine learning model called a Liquid Neural Network (LNN) (3). Seeing how large and complex neural networks had to become to perform tasks, they searched for a simpler solution. The team drew inspiration from C. elegans, a microscopic worm commonly found in soil and widely studied to understand human diseases. This creature performs complex tasks with only 302 neurons, compared to a human’s roughly 86 billion. Inspired by this, the team set out to build a model that uses far fewer nodes than a traditional network yet can still handle complex functions and actions (2).

Image of a microscopic C. elegans worm.

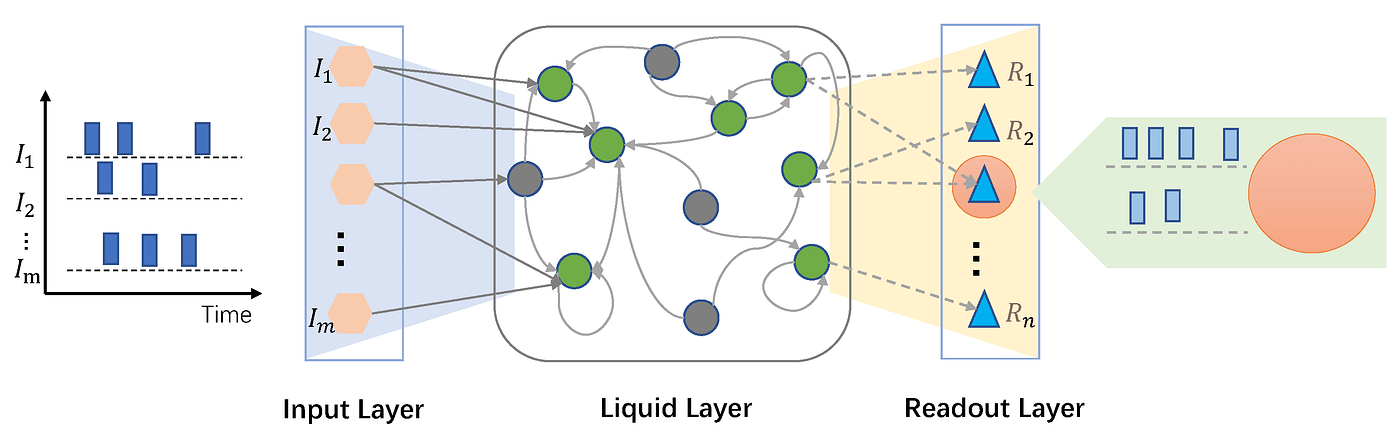

Traditionally, once a machine learning model is trained, it always behaves the same way, based on what it learned from its training data. Just as a liquid adapts to fill any container, these researchers created a model that can adapt based on the new data it receives. The hidden processing layers of a traditional model are replaced with a liquid layer designed for time series data. Unlike the static images or text a typical model uses, time series data is a sequence of measurements, each tied to a timestamp. This means the model, much like a human, can observe how the data or images change over time and adapt accordingly (3).
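Below is a minimal Python sketch of a single liquid time-constant (LTC) neuron, the kind of unit a liquid layer is built from, processing a time series one step at a time. Its state x follows dx/dt = -(1/τ + f)·x + f·A, where the gate f depends on the incoming data. As simplifications, f here is a single sigmoid of the current input only (in the published formulation it can also depend on the neuron’s state), the parameter values `TAU`, `W`, `B`, and `A` are hypothetical, and the update is a plain explicit Euler step chosen for readability. Treat it as an illustration, not the MIT team’s implementation.

```python
import math
from typing import List


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical parameter values, chosen only for illustration.
TAU = 1.0  # base time constant of the neuron
W = 2.0    # input weight inside the gate
B = 0.0    # gate bias
A = 1.0    # level the gate pulls the state toward


def gate(inp: float) -> float:
    """f(I): how strongly the current input drives the neuron, between 0 and 1."""
    return sigmoid(W * inp + B)


def ltc_step(x: float, inp: float, dt: float) -> float:
    """One explicit Euler step of dx/dt = -(1/TAU + f) * x + f * A."""
    f = gate(inp)
    dxdt = -(1.0 / TAU + f) * x + f * A
    return x + dt * dxdt


def effective_tau(inp: float) -> float:
    """Effective time constant TAU / (1 + TAU * f): it shrinks as the input grows."""
    return TAU / (1.0 + TAU * gate(inp))


def run(series: List[float], dt: float = 0.1, x0: float = 0.0) -> List[float]:
    """Feed a time series through the neuron and record its state at every step."""
    x = x0
    states = []
    for inp in series:
        x = ltc_step(x, inp, dt)
        states.append(x)
    return states


# A toy time series: quiet, then a sudden strong signal, then quiet again.
series = [0.0] * 20 + [2.0] * 20 + [0.0] * 20
states = run(series)
print(f"state after quiet phase:  {states[19]:.3f}")
print(f"state after strong phase: {states[39]:.3f}")
print(f"effective tau, weak vs strong input: {effective_tau(0.0):.3f} vs {effective_tau(2.0):.3f}")
```

Because f sits inside the decay term, the neuron’s effective time constant, TAU / (1 + TAU·f), shrinks when the input is strong and grows when it is weak, so the same trained neuron reacts faster or slower depending on the data arriving. This input-dependent timing is one concrete sense in which the layer behaves like a liquid.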

Representation of the layers in a liquid neural network with time series data.

The first advantage of an LNN is efficiency: it can be built and run with far fewer resources than other models. The MIT lab created an example LNN to drive a car on a simple road, and while a traditional model may need over 100,000 nodes to complete this task, the LNN needed only 19. A traditional program would attend to every aspect of the picture, such as the road, the sky, and the trees, whereas an LNN can focus solely on what matters for its objective, in this case the edges of the road (4). This focus also helps when disturbances in the data force the program to adapt, such as a different environment or weather condition. A traditional model may be confused by a stormy sky; an LNN, however, still focuses on the sides of the road, ignoring the unnecessary inputs and prioritizing its objective (4).
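To put the node counts from the driving example in perspective: because the traditional model needs *over* 100,000 nodes, the ratio below is a lower bound on how many times smaller the LNN was.

```latex
\frac{100{,}000 \text{ nodes}}{19 \text{ nodes}} \approx 5{,}263
```

In other words, the LNN in this example used at least about 5,000 times fewer nodes than the traditional model.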

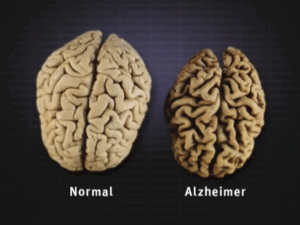

Traditional neural networks are trained on more and more data to account for variation, but the added complexity also makes their decisions harder to decipher. To produce an output, an input may pass through many layers of nodes, much as a real-life decision passes through many considerations. A decision tree is a helpful way to picture this: it maps out each of these choices, showing how factors such as the weather, your mood, and the time of day lead to a final decision. Because an LNN has fewer nodes, it is easier to understand precisely where in the process it makes its decision. This makes examining outliers and pinpointing errors much easier (5, 6).
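To make the decision-tree analogy concrete, here is a small hand-built tree in Python using the three factors named above (weather, mood, and time of day). The tree, its questions, and its outcomes are hypothetical. The point is the `path` it returns: because every branch is explicit, you can see exactly which factor settled the decision, which is the kind of traceability attributed to smaller networks. This is an analogy only, not a representation of how an LNN computes.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Dict, List, Tuple, Union


@dataclass
class Leaf:
    decision: str  # final outcome once no more factors need checking


@dataclass
class Node:
    factor: str  # the yes/no question asked at this branch
    if_yes: Union[Node, Leaf]
    if_no: Union[Node, Leaf]


def decide(tree: Union[Node, Leaf], situation: Dict[str, bool]) -> Tuple[str, List[str]]:
    """Walk from the root to a leaf, recording every factor checked on the way."""
    path: List[str] = []
    node = tree
    while isinstance(node, Node):
        answer = situation[node.factor]
        path.append(f"{node.factor}? {'yes' if answer else 'no'}")
        node = node.if_yes if answer else node.if_no
    return node.decision, path


# Hypothetical tree for "Should I go for a walk?"
WALK_TREE = Node(
    factor="nice weather",
    if_yes=Node(
        factor="good mood",
        if_yes=Leaf("go for a walk"),
        if_no=Node(factor="daytime", if_yes=Leaf("go for a walk"), if_no=Leaf("stay in")),
    ),
    if_no=Leaf("stay in"),
)

decision, path = decide(WALK_TREE, {"nice weather": True, "good mood": False, "daytime": True})
print(decision)  # go for a walk
print(path)      # ['nice weather? yes', 'good mood? no', 'daytime? yes']
```

If the outcome looks wrong, the recorded path points straight to the branch responsible, which is the same reason fewer, more inspectable nodes make errors easier to pinpoint.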

A simple decision tree.

As AI is integrated into more applications that must adapt to their surroundings, LNNs are likely to become increasingly important. Because LNNs are still an emerging technology, relatively few resources have been devoted to bringing them to the forefront of the AI space. Once adopted, however, these models could play a major role in AI’s efficiency. They could shorten the time it takes to create AI, reduce its environmental impact, and improve accuracy, while also giving us a clearer understanding of precisely where a model makes a decision, which is vital to accident prevention (6). While Liquid Neural Networks have not made their way into our everyday lives just yet, they will surely “seep” into the tech we use before we even notice.

Bibliography

- IBM. (2021, October 6). Neural network. Retrieved from https://www.ibm.com/think/topics/neural-networks

- Ackerman, D. (2021, January). “Liquid” machine-learning system adapts to changing conditions. MIT News. Retrieved from https://news.mit.edu/2021/machine-learning-adapts-0128

- Boesch, G. (2024, May 17). What are Liquid Neural Networks? viso.ai. Retrieved from https://viso.ai/deep-learning/what-are-liquid-neural-networks/

- Heater, B. (2023, August 17). What is a liquid neural network, really? TechCrunch. Retrieved from https://techcrunch.com/2023/08/17/what-is-a-liquid-neural-network-really/

- IBM. (2021, November 2). Decision tree. Retrieved from https://www.ibm.com/think/topics/decision-trees

- Dickson, B. (2023, August 2). How MIT’s Liquid Neural Networks can solve AI problems from robotics to self-driving cars. VentureBeat. Retrieved from https://venturebeat.com/ai/how-mits-liquid-neural-networks-can-solve-ai-problems-from-robotics-to-self-driving-cars/

Images

https://worms.zoology.wisc.edu/research/elegans

https://365datascience.com/tutorials/machine-learning-tutorials/decision-trees
