How machine learning can be used to communicate with sperm whales

Almost everyone has wondered what their dog is trying to say when it barks incessantly at a squirrel, or what a bird means when it chirps insistently during a stroll in the park. Until recently, communicating with animals was possible only in fairy tales and science fiction. Yet with the rapid integration of artificial intelligence into translation technologies, interspecies communication is no longer a far-fetched concept. Just as machine learning has revolutionized human communication, it also shows promise in unlocking the “languages” of the animal realm. Currently, the largest interspecies communication effort in history is the Cetacean Translation Initiative (Project CETI), which aims to discover the underlying architecture of whale communication through machine learning techniques.

David Gruber, the founder and leader of Project CETI since 2020, became fascinated with sperm whales after hearing the Morse code-like patterns of their calls. Unlike other marine animals, sperm whales communicate in rhythmic sequences of clicks called codas (1). The unique, almost human-like character of this communication suggested an opportunity to him: understanding animals. A wildlife researcher himself, he contacted the machine learning experts and whale biologists Shafi Goldwasser, Michael Bronstein, and Shane Gero and invited them to take part in the study (1). Together, they formed Project CETI to build on Gruber’s promising proposition.

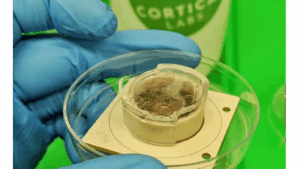

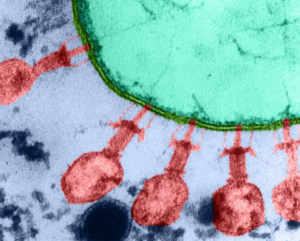

Their first objective was to record billions of samples of whale codas to feed into a machine learning algorithm. Project CETI needed an estimated four billion clicks to produce accurate results (2). To collect them, researchers placed listening stations on the ocean floor, attached recorders to the whales themselves, and used floating hydrophones (underwater microphones) to capture ocean sounds (3). Once they amassed enough recorded codas, they used the data to train a machine learning algorithm, which lets the computer learn to detect and classify features of the recordings on its own (2).

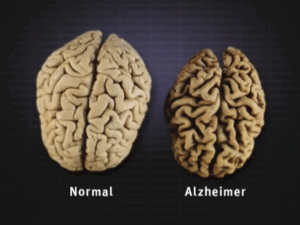

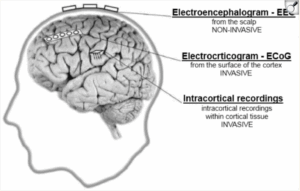

What makes a machine learning algorithm so powerful is its use of neural networks, which are collections of interconnected, multilayered nodes inspired by the structure of neurons in the human brain (4). An algorithm is trained by giving the neural network an input, comparing the actual result with the expected output, and calculating the difference between the two. This difference is then fed back into the network iteratively and used to adjust the weights of the connections between nodes until the difference is minimized (4). Two widely used categories of neural networks are convolutional (CNN) and recurrent (RNN) neural networks. While CNNs are suited to problems involving spatial data, such as images, RNNs are preferred for temporal and sequential data, such as text and video (5).

To analyze an image, CNNs use mechanisms called filters to detect its distinguishing features, such as edges, textures, and colors (5). In the first layers of a CNN, the filter is “slid” over the input and scans for matches between the input and the filter. The result is a new mapping, called a feature map, that indicates where the feature was detected. Feature maps are generated repeatedly, capturing only low-level characteristics in the early layers but more complex patterns in deeper layers (5).

RNNs, on the other hand, are useful when the network needs to “remember” previous information and factor it into future calculations. For example, suppose a model is translating the phrase “It is raining outside.” By the time the model arrives at the word “outside,” its output is already influenced by the earlier words, beginning with “It,” and the translation is contextualized accordingly (5).
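The training procedure described above (produce an output, compare it with the expected output, and feed the difference back) can be illustrated with a minimal sketch. It assumes the simplest possible "network", a single node computing `w * x + b`, and uses plain gradient descent on the squared error. The toy data, the learning rate, and the function names are illustrative assumptions, not anything used by Project CETI.

```python
# Minimal sketch: train a single node y = w * x + b by gradient descent.
# The data, learning rate, and epoch count are illustrative assumptions.

def train(samples, learning_rate=0.05, epochs=2000):
    w, b = 0.0, 0.0  # start from an untrained node
    for _ in range(epochs):
        grad_w, grad_b = 0.0, 0.0
        for x, expected in samples:
            actual = w * x + b          # forward pass: produce an output
            error = actual - expected   # difference between actual and expected
            grad_w += 2 * error * x     # gradient of the squared error w.r.t. w
            grad_b += 2 * error         # gradient of the squared error w.r.t. b
        # Feed the difference back: nudge the parameters to shrink the error.
        w -= learning_rate * grad_w / len(samples)
        b -= learning_rate * grad_b / len(samples)
    return w, b


def mean_squared_error(samples, w, b):
    return sum((w * x + b - y) ** 2 for x, y in samples) / len(samples)


# Toy data generated from the rule y = 2x + 1.
samples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train(samples)
print(w, b, mean_squared_error(samples, w, b))
```

After enough iterations, `w` and `b` settle close to 2 and 1, the values that generated the toy data. Real networks repeat the same idea across many nodes and layers.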

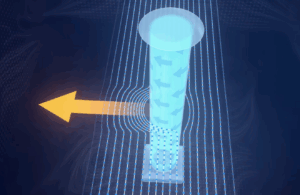
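The two mechanisms contrasted earlier, a filter slid across an input and a network that carries a memory of earlier inputs, can each be sketched in a few lines. This is a simplified illustration under stated assumptions: the filter runs over a one-dimensional row of pixel values rather than a full image, the recurrent network has a single hidden value, and the weights `w_in` and `w_state` are arbitrary numbers chosen for the example rather than learned.

```python
import math


def slide_filter(signal, kernel):
    """Slide `kernel` over `signal`, recording how strongly each window matches."""
    width = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(width))
        for i in range(len(signal) - width + 1)
    ]


def run_recurrent(inputs, w_in=0.5, w_state=0.8):
    """Carry a hidden state forward so each step depends on earlier inputs."""
    state = 0.0
    states = []
    for x in inputs:
        # The new state mixes the current input with the previous state.
        state = math.tanh(w_in * x + w_state * state)
        states.append(state)
    return states


# A row of pixels that jumps from dark (0) to bright (1).
row = [0, 0, 0, 1, 1, 1]
edge_kernel = [-1, 1]  # responds where brightness increases
feature_map = slide_filter(row, edge_kernel)
print(feature_map)

# The same final input (0) yields different states depending on the history.
with_history = run_recurrent([1, 0, 0])
without_history = run_recurrent([0, 0, 0])
print(with_history[-1], without_history[-1])
```

The feature map is nonzero only at the position where the brightness changes, which is the sense in which a filter "detects" an edge. The recurrent example ends on the same input in both runs, yet the final state differs because the first run saw a nonzero input earlier.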

Project CETI uses both techniques to advance the study of sperm whale communication. The CNN-based approach is mainly used to build an echolocation click detector that analyzes spectrograms, which are visual representations of how a sound’s frequencies change over time. A CNN labels each spectrogram image as “click” or “no click” according to whether a click is present (6). Once they have found the images with clicks, researchers use RNNs to classify whale codas into types based on their contexts. Currently, they have separated clicks into three categories: standard clicks used for echolocation, buzzes for prey detection, and reverberating slow clicks produced only by males (6). Because RNNs learn these distinctions from the data themselves, sorting billions of recorded clicks into coda types becomes far more cost- and time-effective. With RNNs, researchers can also identify individual whales, different social units, and dialects.
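To make the spectrogram step concrete, the sketch below computes a small spectrogram from a synthetic recording and labels each time frame as “click” or “no click.” It is only a stand-in under loud assumptions: the labeling here is a simple energy threshold, not the CNN described in (6), and the signal, frame size, and threshold are invented for illustration.

```python
import cmath
import math


def spectrogram(samples, frame_size=16, hop=16):
    """Short-time Fourier magnitudes: one list of frequency bins per time frame."""
    frames = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = samples[start:start + frame_size]
        bins = []
        for k in range(frame_size // 2 + 1):
            # Discrete Fourier transform coefficient for frequency bin k.
            coeff = sum(
                frame[n] * cmath.exp(-2j * math.pi * k * n / frame_size)
                for n in range(frame_size)
            )
            bins.append(abs(coeff))
        frames.append(bins)
    return frames


def label_frames(spec, threshold):
    """Stand-in for a trained classifier: label frames by total spectral energy."""
    return [
        "click" if sum(m * m for m in bins) > threshold else "no click"
        for bins in spec
    ]


# Synthetic recording (an assumption): silence with one short impulse.
signal = [0.0] * 64
signal[20] = 1.0

spec = spectrogram(signal)
labels = label_frames(spec, threshold=0.5)
print(labels)
```

A click is a very short, broadband sound, so it spreads energy across every frequency bin of the frame in which it occurs. That pattern is what makes clicks visible in a spectrogram image and, in the real system, learnable by a CNN.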

Despite its innovative approach, Project CETI is not the first of its kind. Many studies have investigated animals’ capacity for language, such as Professor Herbert Terrace’s attempt to teach chimpanzees sign language. In Terrace’s case, however, the chimpanzee subjects were merely mimicking motions rather than communicating through them (3). Other studies have similarly overestimated animals’ linguistic competence, and their shortcomings have reinforced the belief that language is a uniquely human faculty. Project CETI challenges this assumption. Like humans, sperm whales maintain rich and intricate social structures, living in separate clans that each have their own dialect of coda sequences (3). The sheer complexity of their acoustic behavior makes them the ideal species for this research (6). Still, it remains unclear whether sperm whale communication truly has properties like grammar, syntax, and phonetics that are analogous to those of human language (1). As Project CETI develops, interspecies communication could considerably impact wildlife research and environmental studies. For now, however, Gruber states that Project CETI “is [more] about listening to the whales in their own setting, on their own terms. It’s the idea that we want to know what they’re saying—that we care” (1).

Bibliography

1. Welch, C. (2021, April 19). Groundbreaking effort launched to decode whale language. National Geographic. Retrieved from https://www.nationalgeographic.com/animals/article/scientists-plan-to-use-ai-to-try-to-decode-the-language-of-whales.

2. Andersen, R. (2024, February 24). How First Contact With Whale Civilization Could Unfold. The Atlantic. Retrieved from https://www.theatlantic.com/science/archive/2024/02/talking-whales-project-ceti/677549.

3. Kolbert, E. (2023, September 4). Can We Talk to Whales? The New Yorker. Retrieved from https://www.newyorker.com/magazine/2023/09/11/can-we-talk-to-whales.

4. Hardesty, L. (2017, April 14). Explained: Neural networks. MIT News. Retrieved from https://news.mit.edu/2017/explained-neural-networks-deep-learning-0414.

5. Petersson, D. (2023, August 8). CNN vs. RNN: How are they different? TechTarget. Retrieved from https://www.techtarget.com/searchenterpriseai/feature/CNN-vs-RNN-How-they-differ-and-where-they-overlap.

6. Bermant, P.C., Bronstein, M.M., Wood, R.J. et al. (2019, August 29). Deep Machine Learning Techniques for the Detection and Classification of Sperm Whale Bioacoustics. Scientific Reports. Retrieved from https://www.nature.com/articles/s41598-019-48909-4.
